Redefining UX Design for Generative AI Models in Enterprise

by Jay Mandal, Codex Fellow and Dr. Megan Ma, Codex Assistant Director

Since the end of 2022, the explosion in interest in Generative AI models (such as Large Language Models) can largely be attributed to two factors: (i) product performance – specifically, the gigantic leaps in the quality and sophistication of outputs to queries to these models, both in text and image formats; and (ii) the UX – a user-friendly chatbot that has allowed users to easily converse with these models and see their benefits.

In the past year, both large technology companies and startups have emphasized investment in research and development to improve the performance of generative AI products. However, development of UX design for these products has lagged behind. UX, simply defined, is how well and how easily a user can navigate a product and find what they need. Treating UX development as a second-class priority is impeding users from realizing the full benefits of these generative AI technologies. The following are just a few examples of major developments in generative AI technology that will require rethinking UX beyond a text-based dialogue with a chatbot to manage the needs of users:

- Multimodal capabilities – using not only text, but voice, vision and other media, as both inputs and outputs

- Integration of generative AI models – through APIs or homegrown models – into different steps of an enterprise workflow for customers

- No-code/low-code approaches to create and use generative AI models in different subject areas (such as OpenAI’s newly launched GPTs, which can be created by users without technical skill sets)

In the enterprise space, generative AI models are evolving from a novelty into a technology that small and large companies are investigating how to adopt for the benefit of their customers in areas like legal, medical, and software/SaaS businesses. These companies are concerned not just with product performance, but also with how to make these solutions easy for their customers to use and adopt in various scenarios and workflows. Their UX needs may go beyond the simple text-based chat interface and intermittent dialogue on this interface.

In this paper, we propose key UX design principles for generative AI solutions so that users can best avail themselves of the latest and anticipated future product developments, with a focus on user needs in the enterprise space. These UX principles are organized into the following framework for the overall UX workflow of a generative AI solution: input (instructions), process (using knowledge/information and calculations), output, and organize/iterate.

1. Focus on Voice (Input and Output)

A voice-based interface would be the most flexible and accessible interface to take full advantage of multimodal generative AI model capabilities. Verbal communication and dialogue remain an abstraction of actual thoughts and commands, and are the most comfortable means for humans to express their intentions (in comparison with written text or inputting images).

Other inputs, such as text, vision and sound, would be important to supplement these initial verbal instructions. However, human voice would be the primary means of communication and organization of other inputs. For example, a user would verbally provide instructions upon a prompt and ask the model to incorporate other inputs as necessary (such as adding documents or pictures).

For outputs from a generative AI model, voice would also be the primary way a model would communicate and summarize the results of a query. If necessary, the model would direct the user to another medium for supplemental information, such as more detailed text, a picture, or a sound output.

Linguists have long reflected on conversation and notions of communicated meaning through the branch of pragmatics. Pragmatics is largely concerned with extra-linguistic considerations relevant to conversational appropriateness. There is a powerful analogy to be made between conversation and how to engage with LLMs via dialogue. Predating the explosion of LLMs in the computing world, there has been cognitive dissonance between human and machine communication owing to existing programming paradigms. This limited the target user to technical communities well-versed in these programming languages. Interestingly, cognitive scientist and computer programmer Evan Pu noted that the ability to conduct programming tasks in natural language bridges the gap in reasoning between humans and machines.

Therefore, a voice-based interface would democratize access to this technology by allowing users at various literacy levels and of all languages (with language conversion technology) to interact with a solution. It also simplifies the interface, such that a user doesn’t need to choose which would be the primary medium to interact with a model. For example, OpenAI recently introduced Whisper V3 for improved speech recognition across languages and a text-to-speech (TTS) interface, both of which would facilitate better voice-based interactions.

From a hardware perspective, the use of voice also frees the UX from a keyboard or phone-based interaction with generative AI models. It would allow the user to interact with a wide array of different hardware form factors, such as glasses, ear pieces, phones/laptops, watches, mobile devices, speakers (like Alexa, Google Home), etc. The supplemental information – such as text, picture and sound – could be input or displayed on a wide array of hardware, but not drive the user experience.

2. Simplified Inputs (Input)

Interacting with generative AI models has been complicated by the idea of prompt engineering. Simply put, prompt engineering is the process of providing context to generative AI models to better curate results. A whole cottage industry of prompt engineering-driven projects/startups has arisen to determine how to most consistently input logic and code into commands, add supplementary/proprietary information, and obtain recommended outputs from generative AI models. In the enterprise space, this has further splintered into various enterprise-specific solutions that also put a heavy emphasis on prompt engineering (e.g., for sales, customer service, legal, medical, etc.).

The goal would be to abstract away these unnecessarily complex prompt engineering techniques. In this new UX design, a user would be able to use natural language conversation to prompt, or instruct, a generative AI tool. In the background, the model would need to translate a person’s verbal command into an engineered prompt in a way that delivers the desired output, and that is more transparent and replicable. As needed, the voice functionality would engage in dialogue with a user to clarify the user’s intention, such as with questions or requests for additional inputs (e.g., voice, text, vision, or other inputs). The model would also take into account implied knowledge from past interactions with the user, such as prior dialogue and past commands, to clarify the context of these commands.
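The interpretation step described above can be illustrated with a minimal sketch. It assumes the spoken request has already been transcribed to text; the names `Interpretation`, `interpret`, and `VAGUE_TERMS` are hypothetical, and the word-list check is only a stand-in for whatever intent detection a real model would perform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interpretation:
    """Result of turning a transcribed request into a structured prompt."""
    prompt: str
    clarifying_question: Optional[str] = None


# Hypothetical list of words that signal an unresolved reference.
VAGUE_TERMS = {"it", "that", "this", "those"}


def interpret(utterance: str, history: list) -> Interpretation:
    """Build a prompt from an utterance plus prior dialogue (illustrative only)."""
    words = {w.strip(".,?!").lower() for w in utterance.split()}
    # With no prior dialogue, a word such as "it" has nothing to refer to,
    # so the tool asks the user rather than guessing.
    if not history and words & VAGUE_TERMS:
        return Interpretation(prompt="", clarifying_question="What are you referring to?")
    # Otherwise, past turns are attached as context so the user does not
    # have to restate them in the request.
    context = "\n".join(f"- {turn}" for turn in history)
    prompt = f"Prior context:\n{context}\n\nUser request: {utterance}"
    return Interpretation(prompt=prompt)
```

For instance, `interpret("Summarize it", [])` would come back with a clarifying question, while the same request made after an earlier turn about a report would yield a prompt that carries that turn as context.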

Humane’s AI Pin is another example in the market of a voice-first UX that allows a user to provide instructions to a wearable, portable AI device. AI Pin, along with OpenAI’s new voice-driven GPT creation interface, exemplifies this idea of enabling a user to provide simplified verbal input commands that are interpreted by the model to then provide the recommended outputs to the user.

3. Sessions: Search and Retrieval of Stateful Sessions (Output)

Human thinking is often a non-linear progression, bouncing between ideas and intervening thoughts, and co-mingling concepts in real time. This would be the mental model behind this UX principle. First, the voice interface would allow the user to engage with stateful sessions (that is, a user’s prior dialogues with the model that remain in memory and are retrievable by the generative AI model), to bounce between these sessions, and to integrate thoughts from these sessions seamlessly. The model would allow the user to identify a prior session based on verbal cues – for example, a user could summon prior sessions based on either time periods or verbal descriptions of a prior session. Under this stateful system, all of these prior sessions would be ready for immediate retrieval, search and display/summarization for the user.

We anticipate the development of stateful sessions as a future offering in generative AI models. For example, recent announcements from OpenAI recognized users’ desire for a stateful system and indicated that certain features of ChatGPT 4.0 will retain and retrieve the prior history of a user’s conversations with the AI solution as “threads,” such as in the recently announced AI Assistant product. This kind of stateful system will become more ubiquitous, and is only the beginning of more complex interactions between a user and the generative AI solutions of the future.

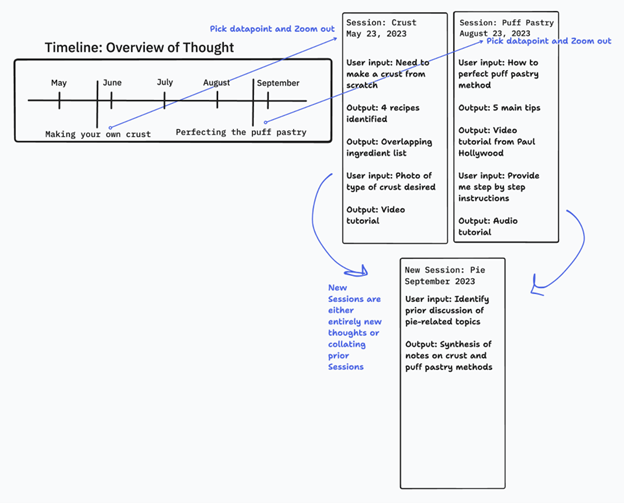

Figure 1.0 provides a wireframe of a possible UX design for how a user would interact with our proposed stateful sessions. In this case, a user has a comprehensive timeline of all sessions with a generative AI model, which can be verbally retrieved by either the timestamp of the session or a description of the conversation in that session. In this example, the user is an avid baker who intends to make a pie for Thanksgiving. The baker needs to recall all prior sessions with the model that relate to baking. The idea is that humans frequently draw references from memory and collate this information in new contexts. When a user recalls prior sessions, the sessions can either be verbally summarized to the user, or visually displayed on an interface (agnostic to the device, so it could be a computer, tablet, or phone) as shown in Figure 1.0. These prior sessions could also be retrieved by day and time – for example, the user could ask “pull up (or summarize) all the sessions I had yesterday.”

Image Caption: Figure 1.0 Stateful Sessions: Search, Retrieval and Iterations
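The two retrieval paths described for stateful sessions (by description and by time period) can be sketched as follows. This is an illustration under stated assumptions, not a design from any product: `Session` and `SessionStore` are hypothetical names, and plain keyword matching stands in for the semantic search a real system would likely use.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass
class Session:
    title: str
    started: datetime
    transcript: str


class SessionStore:
    """In-memory stand-in for a store of a user's prior sessions."""

    def __init__(self):
        self._sessions = []

    def add(self, session: Session) -> None:
        self._sessions.append(session)

    def by_description(self, query: str) -> list:
        """Return sessions whose title or transcript mentions any query term."""
        terms = query.lower().split()

        def matches(session: Session) -> bool:
            text = f"{session.title} {session.transcript}".lower()
            return any(term in text for term in terms)

        # Oldest first, so results read as a timeline.
        return sorted(filter(matches, self._sessions), key=lambda s: s.started)

    def by_day(self, day: date) -> list:
        """Return sessions that started on the given calendar day."""
        hits = [s for s in self._sessions if s.started.date() == day]
        return sorted(hits, key=lambda s: s.started)
```

A spoken request such as "pull up all the sessions I had yesterday" would map to `by_day`, while "remind me of the recipes related to making a pie" would map to `by_description`; how the spoken request is routed to one or the other is left out of the sketch.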

4. Iterating on Prior Sessions (Organize and Iterate)

The human brain retains prior conversations, summons those ideas as needed, and builds upon those prior ideas to create new ones. We think future developments in generative AI models will extend these natural human thought patterns as part of this new user paradigm of stateful sessions. Building on the ideas from section 3 above, a user would be able to access prior sessions with a generative AI model to iterate upon prior ideas, regroup ideas, or even reframe ideas from these sessions in order to create a new dialogue with the model.

More specifically, as a first step a user would summon these prior sessions, based on either a verbal description of the topic or a timestamp of sessions from a specific time period. The user would then instruct the model to re-engage with, and iterate upon, one or more specific ideas from one or more prior sessions. The user would then engage in a new dialogue with the model in this newly created session. These actions would be initiated either through verbal commands to the model, or through interaction with a visual interface (such as a phone, tablet, or computer) in case the sessions are numerous and more complicated.

Consider again the example of the avid baker in Figure 1.0. The avid baker has already retrieved all prior sessions based on an initial verbal prompt: “remind me of all the prior recipes we discussed related to making a pie.” The user can then either hear a verbal summary or see a visual rendering of those prior sessions, “Making your own crust” and “Perfecting puff pastry,” on a phone, computer, tablet, etc. The baker can then choose to take an idea from either of those prior sessions to continue the dialogue and refine their thinking. Or, in a new third session, the baker can synthesize and merge the ideas from both the “Making your own crust” and “Perfecting puff pastry” sessions to create one on “Making a pie from scratch.”
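The baker's third session can be sketched as a merge of earlier sessions into a new one. The names `WorkingSession` and `merge_sessions` are hypothetical, and representing a session as a list of idea strings is a simplifying assumption; the point is only that the new session is seeded from its parents and keeps a record of where its ideas came from.

```python
from dataclasses import dataclass, field


@dataclass
class WorkingSession:
    title: str
    ideas: list
    parents: list = field(default_factory=list)  # titles of source sessions


def merge_sessions(title: str, sources: list) -> WorkingSession:
    """Create a new session seeded with the ideas of earlier sessions."""
    ideas = []
    for session in sources:
        for idea in session.ideas:
            # Keep first-seen order and drop ideas shared by several sources.
            if idea not in ideas:
                ideas.append(idea)
    # The source sessions are left untouched, so they remain retrievable.
    return WorkingSession(title=title, ideas=ideas, parents=[s.title for s in sources])


crust = WorkingSession("Making your own crust", ["chill the butter", "rest the dough"])
pastry = WorkingSession("Perfecting puff pastry", ["rest the dough", "fold in thirds"])
pie = merge_sessions("Making a pie from scratch", [crust, pastry])
```

Recording `parents` on the merged session is a design choice that also supports the traceability discussed later in the paper, since any idea in the new session can be followed back to its source.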

This replicates the flexibility of the mind in organizing and iterating on ideas. One of the core strengths of generative AI models is their ability to retrieve and organize a practically unlimited number of sessions, unlike humans, who are capable of retrieving only a limited number of past memories in an organized state. The experience is also fully immersive, in that a user is interacting with the current session, drawing from prior sessions (or memories), and also incorporating multimodal inputs to supplement the conversation (such as voice, text, pictures and sound). This mirrors the human mind in that we think about prior memories, but incorporate all the current sensory information to refine our current thinking (what we see, what we smell, and other inputs).

5. Integrated Feedback Loop (Output, Input, and Iterate)

Because a user cannot always convey their intentions exactly when communicating with a generative AI model, the feedback loop involved in this UX design is also important to help draw out a user’s implicit goals. Since voice is the primary interface, the UX should allow for a dialogue between the user and the model to clarify the input or output required.

For example, when a generative AI tool produces a verbal output, it would either: (1) provide a definitive output and occasionally ask for optional feedback/clarification (e.g., “how did you like this answer?”); or (2) provide a directional output if the request is unclear, and ask clarifying questions in specific areas to refine the search to better meet the user’s needs. This would serve two purposes. It would ensure that the tool gets closer to the original intention of the user’s initial instructions. It would also provide data to the model to understand the user’s implied thinking when asking future questions, and create a database of information to reference for future similar inquiries. The frequency and type of these requests for feedback would be informed by the behavior of individual users – i.e., some users may be more receptive to providing feedback.
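The choice between a definitive and a directional output can be sketched as a small decision function. Everything here is an assumption made for illustration: the `confidence` score (how well the tool believes it understood the request), the `threshold` value, and the per-user `feedback_every` setting are hypothetical stand-ins for signals a real system would have to derive.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reply:
    kind: str                 # "definitive" or "directional"
    text: str
    follow_up: Optional[str]  # question spoken after the answer, if any


def respond(answer: str, confidence: float, turn: int,
            feedback_every: int, threshold: float = 0.7) -> Reply:
    """Pick the output style and decide whether to ask the user anything."""
    if confidence < threshold:
        # Unclear request: give a directional answer and ask for detail.
        return Reply("directional", answer,
                     "Could you tell me more about what you need?")
    # Clear request: answer definitively, and only occasionally ask for
    # optional feedback. feedback_every is tuned per user; 0 means never ask.
    ask = feedback_every > 0 and turn % feedback_every == 0
    return Reply("definitive", answer,
                 "How did you like this answer?" if ask else None)
```

A user who rarely responds to feedback requests could be given a larger `feedback_every` (or 0), which reflects the idea that the frequency of these requests adapts to individual behavior.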

The feedback loop for a visual interface for generative AI models has already been tested by various current LLMs. For example, in the case of OpenAI, ChatGPT can receive human feedback by presenting two competing answers for a user to choose from. OpenAI also presents thumbs-up and thumbs-down feedback choices for users to rate the outputs produced. These are rather simplistic ways to obtain user preferences/feedback, and are geared more toward training the models. A way forward would be a more personally customized, substantive dialogue with a user about their satisfaction with an answer, which would reference specific details and questions about the output provided.

6. Transparency via Auditability

A user would want transparency into their prior sessions with a generative AI model in the form of auditability. That is, both their inputs into the model and the outputs from it in each of these sessions should be traceable.

We consider the transparency and auditability of a user’s inputs to be in line with the new UX paradigm we have proposed. As discussed, a user should have a full scope of search, including all dialogue between user and tool, which could be organized by sessions that are stateful and immediately retrievable.

As we move from prompt engineering to inputting simplified commands, there should be a traceable trail of the instructions inputted into the system (and also the iterations on outputs). The auditability of outputs from generative AI tools is still very much at the frontier of research. For example, general-purpose AI tools like ChatGPT, Bard, and Claude do not provide their sources of data. However, a growing number of specialized solutions do so (e.g., Truepic, Arize AI). We see an opportunity for increasingly sophisticated forms of information provenance capable of identifying the primary sources that have led to the outputs generated.
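One way to picture such a traceable trail is an append-only log that stores, for each exchange, what the user said, the prompt derived from it, the output, and any sources the tool can point to. This is a minimal sketch under our own assumptions: `AuditRecord` and `AuditLog` are hypothetical names, and the `sources` field presumes the underlying tool can report provenance at all, which, as noted above, is still an open research question.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    session_id: str
    utterance: str        # what the user actually said
    derived_prompt: str   # what was sent to the model on the user's behalf
    output: str
    sources: tuple = ()   # provenance identifiers, if the tool can supply them
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


class AuditLog:
    """Append-only, in-memory record of inputs and outputs per session."""

    def __init__(self):
        self._records = []

    def record(self, entry: AuditRecord) -> None:
        self._records.append(entry)

    def trail(self, session_id: str) -> list:
        """Return every record for one session, in the order it was logged."""
        return [r for r in self._records if r.session_id == session_id]

    def export(self, session_id: str) -> str:
        """Serialize one session's trail to JSON for review."""
        return json.dumps([asdict(r) for r in self.trail(session_id)], indent=2)
```

Storing the derived prompt next to the original utterance matters once prompt engineering happens in the background: it lets a user or reviewer see how a simple spoken command was expanded before it reached the model.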

Concluding Thoughts

As these generative AI solutions evolve, the following UX principles should be considered to unlock greater usage and adoption of these tools: (1) voice-first engagement; (2) simplified inputs; (3) search and retrieval in stateful sessions; (4) dynamic iteration on prior sessions; (5) integrated feedback loops; and (6) transparency via auditability. These choices will help this technology become more accessible and useful to users, especially in the case of small and large enterprises as they embed generative AI solutions to meet user needs.

We consider this to be an exploratory effort. It is an initial brainstorm and sketch based on observations from recent developments and our lived experiences with these tools. We anticipate experimenting with this initial UX sketch as a next phase. For now, outstanding questions remain:

- Can UX be a moat for products in the future?

- What is a software / hardware prototype of this new type of UX interface? Are there differences depending on specific enterprise verticals?

- What other UX alternatives could replace chatbot assistants as the prevailing UX for integrated AI agents (e.g., Bard Extensions, Microsoft Copilot Suite, Humane AI Pin)?