The Governance AI Life Cycle Core Principle and the NIST AI Risk Management Framework

The National Institute of Standards and Technology (NIST) is a well-known and highly influential voice in the technology space. It has the ear of courts, regulators, and even international organizations. NIST regularly publishes reports, recommendations, guidelines, industry handbooks, and other resources on a wide variety of technical topics. Properly applied, this information can form the foundation for drafting legally reasonable policies, processes, procedures, and practices (the 4Ps).

When it comes to AI, the NIST AI Risk Management Framework (RMF) is a valuable resource for all AI actors* and for other stakeholders such as courts, regulators, and legislators. The RMF focuses on enabling the responsible development and use of AI systems. It comprises four functions: Govern, Map, Measure, and Manage. These functions are, in turn, broken into categories and subcategories containing a total of 90 tasks that together support an environment for the responsible development and use of AI systems.

But what does responsible development and use of AI systems mean? The question is complicated, and there are multiple ways to answer it. One approach is to implement and maintain 4Ps that align with the relevant AI Life Cycle Core Principles (Core Principles). There are 37 Core Principles, but not all of them apply at all times or to every AI system. Each organization needs to determine which Core Principles apply to its AI system based on what the system does, whom it serves, what its limitations are, the organization’s risk tolerance, and similar factors. The RMF can also serve as a framework for assessing whether an organization is developing and using AI responsibly. Organizations that don’t adhere to the RMF, or adhere to it only partially, risk failing to maintain policies, processes, procedures, and practices for responsible development and use.

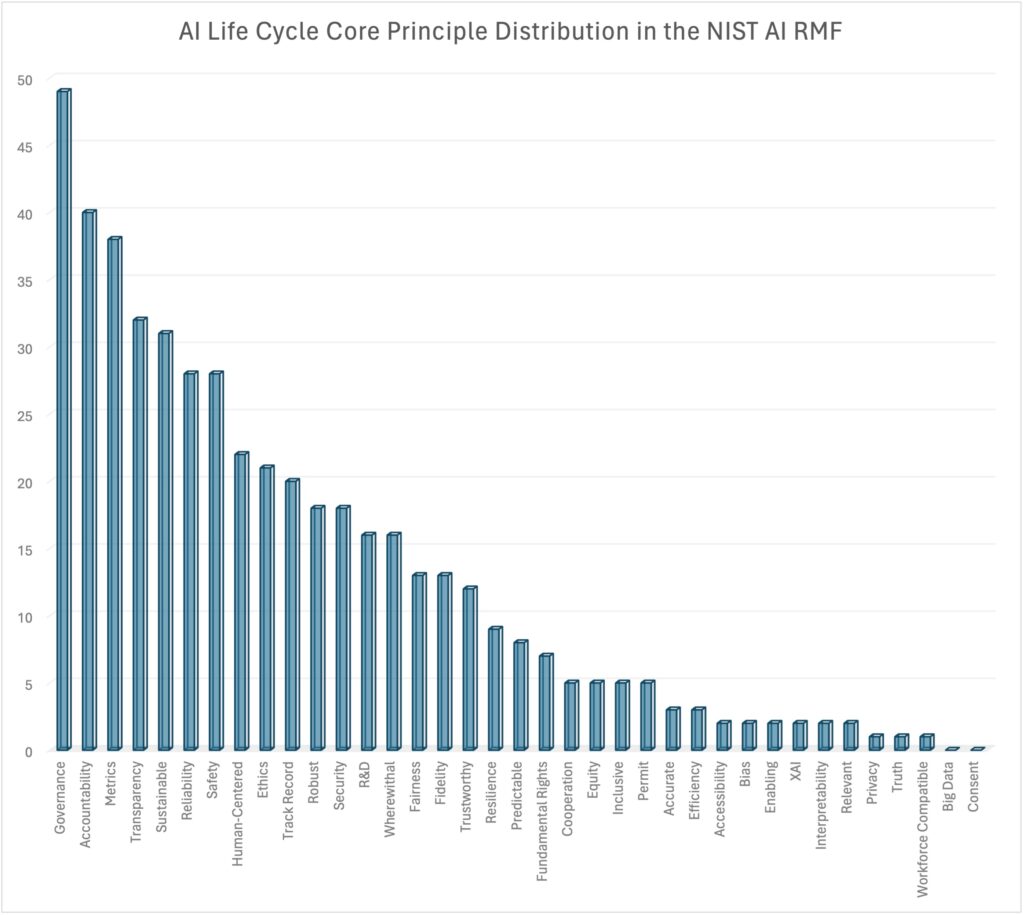

Organizations that want to align their operations with the responsible development and use of AI systems can benefit from understanding how the RMF and the Core Principles relate to each other. With that goal in mind, I analyzed all of the tasks contained in the RMF and tagged each one with the relevant Core Principles. Most of the RMF’s categories and subcategories ended up tagged with multiple Core Principles. For example, the Measure 1.1 task was tagged with the Ethics, Fairness, Human-Centered, Metrics, Sustainable, Transparency, and Trustworthy Core Principles.

The tagging results are shown below. Because more than half of the tasks in the RMF correlate strongly with, and depend on, the Governance Core Principle, organizations need to pay special attention to it.
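To make the tagging exercise concrete, here is a minimal Python sketch of one way such tags could be recorded and tallied, assuming each RMF task is keyed by its ID and mapped to a set of Core Principle names. Only the Measure 1.1 entry reflects the tags reported above; the other two entries and the helper names (`principle_counts`, `share_tagged`, `multi_tagged`) are hypothetical illustrations, not the author's actual data or tooling.

```python
from collections import Counter

# Hypothetical tag map: RMF task ID -> set of Core Principles.
# Only "MEASURE 1.1" uses the tags reported in the text; the other
# entries are illustrative placeholders, not the author's actual tagging.
task_tags: dict[str, set[str]] = {
    "MEASURE 1.1": {
        "Ethics", "Fairness", "Human-Centered", "Metrics",
        "Sustainable", "Transparency", "Trustworthy",
    },
    "GOVERN 1.1": {"Governance", "Transparency"},  # hypothetical tags
    "MAP 1.1": {"Governance", "Trustworthy"},  # hypothetical tags
}


def principle_counts(tags: dict[str, set[str]]) -> Counter:
    """Count how many tasks are tagged with each Core Principle."""
    return Counter(p for principles in tags.values() for p in principles)


def share_tagged(tags: dict[str, set[str]], principle: str) -> float:
    """Fraction of tasks tagged with the given Core Principle (0.0 if empty)."""
    if not tags:
        return 0.0
    hits = sum(1 for principles in tags.values() if principle in principles)
    return hits / len(tags)


def multi_tagged(tags: dict[str, set[str]]) -> list[str]:
    """Task IDs tagged with more than one Core Principle."""
    return [task for task, principles in tags.items() if len(principles) > 1]


if __name__ == "__main__":
    print(principle_counts(task_tags).most_common(3))
    print(f"Governance share: {share_tagged(task_tags, 'Governance'):.0%}")
    print("Multi-tagged tasks:", multi_tagged(task_tags))
```

With all 90 tasks loaded, `share_tagged(task_tags, "Governance")` would return the fraction of tasks touching the Governance Core Principle, which is the kind of figure behind the "more than half" observation.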

*The Organisation for Economic Co-operation and Development (OECD) defines “AI actors” as “those who play an active role in the AI system life cycle, including organizations and individuals that deploy or operate AI.”