Transforming Legal Aid with AI: Training LLMs to Ask Better Questions for Legal Intake

By Nick Goodson, Rongfei Lu, Guest Contributors

The A2J (Access to Justice) gap remains a significant problem, especially for people without a legal background who find the legal system complex and hard to navigate. This problem is compounded by the limited capacity of legal aid organizations and court centers, which heavily rely on human experts to provide assistance. These experts are often overwhelmed by the volume of cases, limiting their ability to offer personalized guidance. Furthermore, there exists a substantial legal knowledge gap, where clients lack the necessary information and understanding to ask effective questions and advocate for themselves. This gap between what clients know and what they need to know to navigate the legal system effectively hinders their ability to achieve justice and fair outcomes.

Large Language Models (LLMs) like OpenAI’s GPT-4 have the potential to reshape how legal aid centers and courts operate. These advances open a promising opportunity to reimagine how people interact with legal services, but on their own, LLMs still cannot bridge clients’ legal knowledge gap or proactively ask effective questions.

To address this problem, we demonstrate a proof of concept of an interactive chatbot that can:

- Identify the client’s main concern to address their most urgent issue.

- Ask probing questions to fully understand the client’s situation.

- Provide informative responses to clients while their cases are reviewed by attorneys.

Bridging the Legal Knowledge Gap

The main challenge in using AI for legal services lies in the gap between what clients know and what they need to know to ask effective questions, the kind that will help them get the best assistance. Without expert guidance or prior legal knowledge, people seeking legal help often struggle to give a full picture of their situation. They may not state their real goals clearly, or they may omit crucial details about their circumstances.

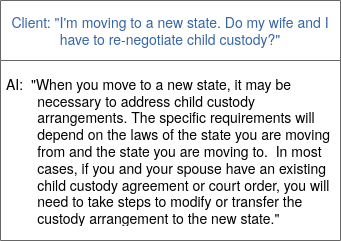

Take the fictional example of Lucy in San Jose, California. Her landlord has notified her that she is being evicted for having too many occupants. Desperate to prevent her family’s eviction and lacking legal knowledge, she turns to a well-known LLM chatbot. Lucy asks, “How can I stop my family from being evicted?” Yet her question leaves out vital information, such as the local laws that apply to her and the specific terms of her rental agreement, which are crucial for an accurate legal response. A human attorney would follow up on Lucy’s inquiry with probing questions to better understand her situation. However, LLM chatbots like the one Lucy is using have been specifically fine-tuned to answer questions regardless of how much information is provided. The model’s response to Lucy is generic and ultimately not very helpful. Lucy tries asking the chatbot follow-up questions but isn’t sure where to start, and each time the chatbot answers confidently based on whatever information Lucy offers. (See Figure 1 for another example.)

A Novel Approach to Legal Aid

Recognizing these challenges, we set out to develop a new framework to adapt LLMs for more effective use in legal contexts. We built an interactive chatbot with two key objectives:

- Identify the client’s main intention to address their most urgent issue.

- Ask detailed questions to fully understand the client’s context.

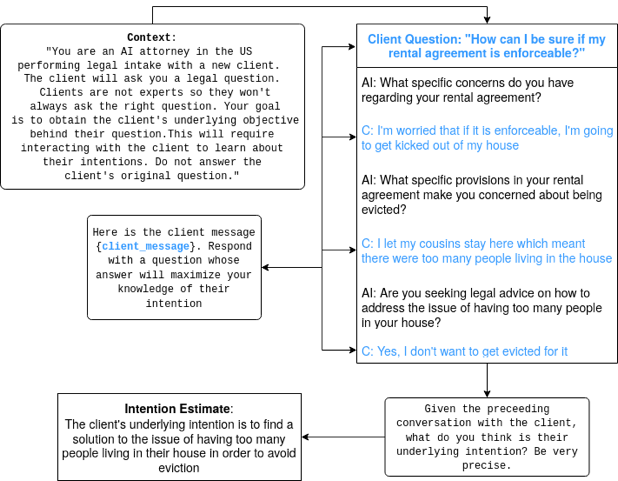

The first objective, intention elicitation, involves guiding the chatbot to ask broad, general questions to understand the client’s needs.

For example, we created system prompts and used in-context learning to have LLMs generate questions that elicit client intentions. These intentions range from clarifying the terms of a rental agreement to finding strategies for preventing eviction (see Figure 2).
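To make the system-prompt-plus-in-context-learning idea concrete, here is a minimal Python sketch of how such a prompt might be assembled. The prompt wording, the example question pairs, and the `build_intention_messages` helper are hypothetical and are not the framework's actual prompts; the output is a list of `role`/`content` messages in the shape commonly accepted by chat-completion APIs, and no model is called here.

```python
from typing import List, Tuple, TypedDict


class Message(TypedDict):
    role: str  # "system", "user", or "assistant"
    content: str


# Hypothetical system prompt: ask about the client's goal before answering anything.
INTENTION_SYSTEM_PROMPT = (
    "You are a legal intake assistant. Do not answer the client's question yet. "
    "Ask one broad, general question that helps you understand what the client "
    "most wants to achieve."
)

# Hypothetical in-context examples: (client message, intention-eliciting question).
INTENTION_EXAMPLES: List[Tuple[str, str]] = [
    (
        "My landlord says I have to leave by Friday.",
        "Are you hoping to stay in your home, or do you mainly need more time to move?",
    ),
    (
        "I don't understand my lease.",
        "Is there a part of the lease you are worried about, such as rent, repairs, "
        "or who is allowed to live in the unit?",
    ),
]


def build_intention_messages(client_query: str) -> List[Message]:
    """System prompt first, then the few-shot pairs, then the real client query."""
    messages: List[Message] = [{"role": "system", "content": INTENTION_SYSTEM_PROMPT}]
    for example_query, example_question in INTENTION_EXAMPLES:
        messages.append({"role": "user", "content": example_query})
        messages.append({"role": "assistant", "content": example_question})
    messages.append({"role": "user", "content": client_query})
    return messages


if __name__ == "__main__":
    for m in build_intention_messages("How can I stop my family from being evicted?"):
        print(f"{m['role']}: {m['content']}")
```

Placing the examples as alternating user/assistant turns lets the model imitate the desired behavior (asking instead of answering) without any fine-tuning.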

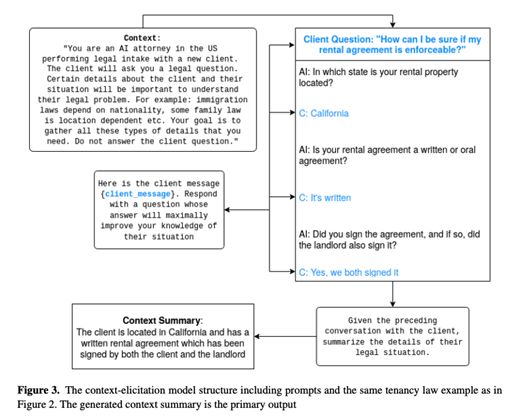

In addition, to provide accurate and relevant legal services, it’s important to know the specific details of the client’s situation. For instance, knowing the client’s jurisdiction is usually crucial. Often, these details are connected to contracts the client has previously signed. Similar to how we handle intention elicitation, our framework guides LLMs to ask questions that gather any missing information from the client (See Figure 3).
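One way to picture context elicitation is as a checklist of facts the intake needs, with the LLM asked about whichever ones are still missing. The sketch below assumes a hypothetical eviction checklist (`REQUIRED_CONTEXT`) and a hypothetical `IntakeState` class; it only builds the prompt text and leaves the model call out.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical checklist for an eviction intake: field name -> example question.
REQUIRED_CONTEXT: Dict[str, str] = {
    "jurisdiction": "Which city and state do you live in?",
    "rental_agreement": "Did you sign a written rental agreement, and what does it say about occupants?",
    "notice": "What kind of notice did your landlord give you, and when?",
}


@dataclass
class IntakeState:
    intention: str  # goal identified during intention elicitation
    known: Dict[str, str] = field(default_factory=dict)

    def missing(self) -> List[str]:
        """Required fields the client has not supplied yet, in checklist order."""
        return [key for key in REQUIRED_CONTEXT if key not in self.known]


def build_context_prompt(state: IntakeState) -> str:
    """Prompt the LLM for one question about the most important missing fact."""
    known = "\n".join(f"- {k}: {v}" for k, v in state.known.items()) or "- (nothing yet)"
    missing = "\n".join(f"- {k} (e.g. '{REQUIRED_CONTEXT[k]}')" for k in state.missing())
    return (
        f"Client's goal: {state.intention}\n"
        f"Facts gathered so far:\n{known}\n"
        f"Facts still missing:\n{missing}\n"
        "Ask the client one clear question about the most important missing fact."
    )


if __name__ == "__main__":
    state = IntakeState(intention="avoid eviction for having too many occupants")
    state.known["jurisdiction"] = "San Jose, California"
    print(state.missing())
    print(build_context_prompt(state))
```

In practice the checklist would vary by legal issue, and once `missing()` returns an empty list the intake could move on to answering rather than asking another question.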

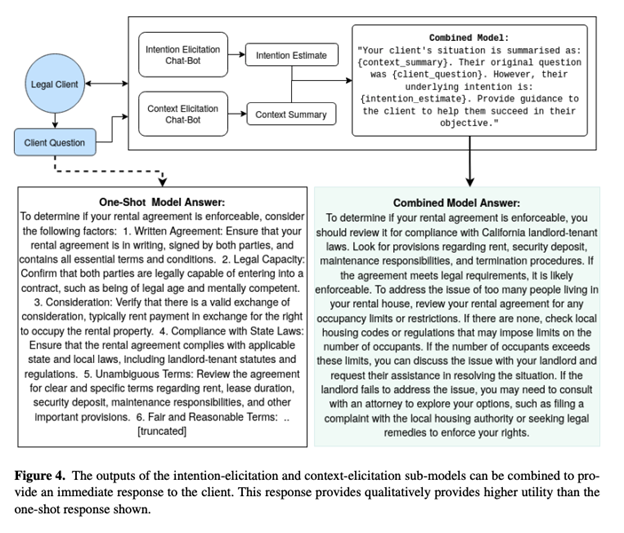

By integrating the intention and context elicitation components, we’ve created a tool that better understands a client’s needs and objectives. When the extra context gathered is combined with the client’s initial query, LLMs can produce responses that are both more accurate and more relevant. In our example, the model successfully identifies the client’s primary goal (avoiding eviction for having too many occupants) and uses the relevant context (living in California, having signed a rental agreement, etc.) to generate much more precise and applicable answers (see Figure 4). This output could then be paired with a document retrieval step that surfaces relevant sections for the client to review.
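The combination step, and the optional retrieval step mentioned above, might look roughly like the following. Word-overlap scoring is a deliberately simple stand-in for a real retriever (a production system would more likely use BM25 or embedding search), and `retrieve`, `build_answer_prompt`, and the sample passages are hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Lowercase alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, passages: List[str], k: int = 2) -> List[str]:
    """Return the k passages sharing the most words with the query (ties keep input order)."""
    query_counts = Counter(tokenize(query))

    def overlap(passage: str) -> int:
        # Counter & Counter keeps the minimum count of each shared word.
        return sum((query_counts & Counter(tokenize(passage))).values())

    return sorted(passages, key=overlap, reverse=True)[:k]


def build_answer_prompt(query: str, intention: str,
                        context: Dict[str, str], sources: List[str]) -> str:
    """Merge the original query with elicited intention, context, and retrieved sources."""
    context_lines = "\n".join(f"- {k}: {v}" for k, v in context.items())
    source_lines = "\n".join(f"[{i}] {s}" for i, s in enumerate(sources, start=1))
    return (
        f"Client question: {query}\n"
        f"Client's main goal: {intention}\n"
        f"Client context:\n{context_lines}\n"
        f"Possibly relevant sources:\n{source_lines}\n"
        "Answer for this client's situation and cite sources by number."
    )


if __name__ == "__main__":
    query = "How can I stop my family from being evicted?"
    # Hypothetical passages, standing in for a real document collection.
    passages = [
        "Occupancy limits in a rental agreement",
        "Parking rules for tenants",
        "Eviction notice requirements in California",
    ]
    context = {"jurisdiction": "San Jose, California", "rental_agreement": "signed"}
    top = retrieve(query + " occupants rental agreement California", passages)
    print(build_answer_prompt(query, "avoid eviction", context, top))
```

Querying with the elicited goal and context, not just the client's original wording, is what lets even a crude retriever find the occupancy and rental-agreement material.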

Risks

From detailed user research on using LLMs for legal questions, Margaret Hagan [1] pointed out that clients tend to over-rely on information provided by LLMs, despite potential inaccuracies caused by hallucination. In a production environment, it is essential that attorneys review LLM-generated responses. This step not only addresses model hallucination but also adds a necessary human element for clients. It may also help mitigate concerns about the unauthorized practice of law.
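Attorney review can be enforced structurally by never releasing a draft to the client until someone has approved it. A minimal sketch, assuming a hypothetical in-memory `ReviewQueue` (a real deployment would also need persistent storage, authentication, and audit logs):

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class ReviewStatus(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class DraftResponse:
    case_id: str
    text: str
    status: ReviewStatus = ReviewStatus.PENDING
    reviewer: Optional[str] = None


class ReviewQueue:
    """Hypothetical in-memory queue: LLM drafts wait here until an attorney decides."""

    def __init__(self) -> None:
        self._drafts: Dict[str, DraftResponse] = {}

    def submit(self, case_id: str, text: str) -> None:
        """Store a new LLM draft; it starts as PENDING."""
        self._drafts[case_id] = DraftResponse(case_id, text)

    def review(self, case_id: str, reviewer: str, approve: bool,
               edited_text: Optional[str] = None) -> None:
        """Record an attorney's decision, optionally replacing the draft with corrected text."""
        draft = self._drafts[case_id]
        if edited_text is not None:
            draft.text = edited_text
        draft.status = ReviewStatus.APPROVED if approve else ReviewStatus.REJECTED
        draft.reviewer = reviewer

    def release(self, case_id: str) -> Optional[str]:
        """Return text for the client only if an attorney approved it; otherwise None."""
        draft = self._drafts[case_id]
        return draft.text if draft.status is ReviewStatus.APPROVED else None


if __name__ == "__main__":
    queue = ReviewQueue()
    queue.submit("case-1", "LLM draft answer")
    print(queue.release("case-1"))  # None: not yet reviewed
    queue.review("case-1", reviewer="attorney-a", approve=True, edited_text="Corrected answer")
    print(queue.release("case-1"))
```

Making `release` the only path to the client means an unreviewed or rejected draft simply cannot reach them, rather than relying on reviewers to catch it afterward.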

Additionally, clients should be informed about, and consent to, the use of AI, with a strong emphasis on protecting their privacy. In line with the guidelines on the ethical use of AI from the State Bar of California [2] and the American Bar Association, real client data and personal information should not be used to train AI models. Such AI tools should also comply with the California Consumer Privacy Act (CCPA) and other applicable data privacy laws to protect client privacy.
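Some of these privacy commitments can be encoded in the data pipeline itself. This hypothetical sketch gates AI processing on recorded consent, keeps real client conversations out of any training set, and masks obvious emails and phone numbers before text is logged. The record fields and regular expressions are assumptions, and simple pattern matching alone is nowhere near sufficient for CCPA compliance.

```python
import re
from dataclasses import dataclass
from typing import List

# Simple patterns only: they catch obvious emails and US-style phone numbers,
# not names, addresses, or case details.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")


@dataclass
class ConversationRecord:
    text: str
    is_synthetic: bool     # True for LLM-generated, attorney-verified conversations
    consented_to_ai: bool  # client was informed about and agreed to AI use


def may_process_with_ai(record: ConversationRecord) -> bool:
    """Only route a conversation to an LLM if the client has consented."""
    return record.consented_to_ai


def select_training_data(records: List[ConversationRecord]) -> List[ConversationRecord]:
    """Keep synthetic conversations only; real client data never enters training."""
    return [r for r in records if r.is_synthetic]


def redact_for_logs(text: str) -> str:
    """Mask emails first, then phone numbers, before text is written to logs."""
    return PHONE_RE.sub("[PHONE]", EMAIL_RE.sub("[EMAIL]", text))


if __name__ == "__main__":
    print(redact_for_logs("Call 408-555-0123 or email lucy@example.com"))
```

Filtering on an explicit `is_synthetic` flag makes exclusion the default: a record that was never marked synthetic cannot slip into a training set by accident.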

The Road Ahead

The framework presented here is an initial exploration of intention and context elicitation with LLMs in legal intake, aimed at advancing access to justice. The next steps involve experiments and ablation studies with both machine and human evaluators. These studies will compare the effectiveness of LLMs that solicit intention and context against baseline one-shot answers generated directly from the client’s initial query.
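For the planned comparison, one common setup is pairwise judging: an evaluator (human or model) sees both answers in random order and picks the better one. The sketch below assumes that setup; `present_pair` and `win_rate` are hypothetical helpers, and the judgments in the example are dummy values, not results.

```python
import random
from collections import Counter
from typing import Dict, List, Tuple

LABELS = ("elicitation", "baseline", "tie")


def present_pair(elicited: str, baseline: str,
                 rng: random.Random) -> Tuple[str, str, Dict[str, str]]:
    """Randomly assign answers to slots A and B so position cannot bias the judge."""
    if rng.random() < 0.5:
        return elicited, baseline, {"A": "elicitation", "B": "baseline"}
    return baseline, elicited, {"A": "baseline", "B": "elicitation"}


def win_rate(judgments: List[str]) -> float:
    """Fraction of comparisons won by the elicitation system, counting ties as half."""
    if not judgments:
        raise ValueError("need at least one judgment")
    unknown = set(judgments) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {unknown}")
    counts = Counter(judgments)
    return (counts["elicitation"] + 0.5 * counts["tie"]) / len(judgments)


if __name__ == "__main__":
    rng = random.Random(0)
    answer_a, answer_b, slots = present_pair("elicited answer", "baseline answer", rng)
    # A judge picks slot "A" or "B" (or calls a tie); slots[choice] recovers the system.
    # Dummy judgments for illustration only, not experimental results.
    dummy = ["elicitation", "baseline", "tie", "elicitation"]
    print(win_rate(dummy))  # 0.625
```

Counting ties as half a win keeps the score at 0.5 when the two systems are indistinguishable, which makes the number easy to read against chance.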

In the future, we aim to use the datasets generated from intention and context elicitation conversations with LLMs to train an interactive conversational agent. This agent will optimize goal-directed objectives over multiple turns, using methods such as supervised fine-tuning or offline reinforcement learning. We also plan to run experiments measuring potential performance improvements over standard zero-shot answers. Furthermore, we intend to release LLM-generated conversation datasets, verified by attorneys, that cover a range of personas and issues in the most critical legal domains in the access to justice space.
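Supervised fine-tuning on elicitation conversations usually means turning each conversation into prompt/target pairs where the target is the agent's next question. A minimal sketch, assuming a hypothetical two-role transcript format and the widely used `prompt`/`completion` field names; offline reinforcement learning is not shown.

```python
import json
from typing import Dict, List, TypedDict


class Turn(TypedDict):
    role: str  # "client" or "agent"
    text: str


def to_sft_examples(conversation: List[Turn]) -> List[Dict[str, str]]:
    """One (prompt, completion) pair per agent turn, conditioned on the history before it."""
    examples: List[Dict[str, str]] = []
    history: List[str] = []
    for turn in conversation:
        if turn["role"] == "agent" and history:
            examples.append({
                "prompt": "\n".join(history) + "\nagent:",
                "completion": " " + turn["text"],
            })
        history.append(f"{turn['role']}: {turn['text']}")
    return examples


def to_jsonl(examples: List[Dict[str, str]]) -> str:
    """Serialize examples as JSON Lines, a format many fine-tuning tools accept."""
    return "\n".join(json.dumps(e) for e in examples)


if __name__ == "__main__":
    conversation: List[Turn] = [
        {"role": "client", "text": "How can I stop my family from being evicted?"},
        {"role": "agent", "text": "Which city and state do you live in?"},
        {"role": "client", "text": "San Jose, California."},
        {"role": "agent", "text": "Did you sign a written rental agreement?"},
    ]
    print(to_jsonl(to_sft_examples(conversation)))
```

Emitting one example per agent turn teaches the model when to ask which question at every point in the conversation, not only how to open it.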

Conclusion

Large Language Models (LLMs) have the potential to drastically change the landscape of legal aid. Here we introduced a proof of concept that uses LLMs to gain a detailed understanding of clients’ situations and elicit their underlying intentions. We showed how this information can be used as input to an LLM to generate qualitatively better final responses without additional fine-tuning.

We propose that LLMs equipped with the capability to actively solicit client intentions and relevant context can significantly enhance access to justice by improving the efficiency of legal intake and triage processes in legal aid and court centers.

Read our full paper, published at the JURIX AI & A2J workshop: https://arxiv.org/abs/2311.13281

Visit our website: https://www.myparaply.org

About the Authors:

Rongfei is the co-founder and CEO of Paraply, a tech non-profit developing an AI-driven legal Q&A marketplace catering to underserved communities. Accessible 24/7 across various platforms, it bridges the gap between legal assistance and those in need.

Before Paraply, Rongfei led global marketing privacy products and legal compliance at Uber. He is also an AI researcher and has published and reviewed at top AI conferences, including NeurIPS. Rongfei received his Bachelor’s degree from Dartmouth College and Master’s degree from Stanford University.

Nick is the co-founder and CTO of Paraply. Before Paraply, Nick led the development of the autopilot software systems for New Zealand’s America’s Cup race yachts. Nick received his Master’s degree from Stanford University.

Citation:

[1] Hagan M. Towards Human-Centered Standards for Legal Help AI [SSRN Scholarly Paper]. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences. 2023 Sep. Available from: https://papers.ssrn.com/abstract=4582745

[2] State Bar of California Committee on Professional Responsibility. Practical Guidance for the Use of Generative Artificial Intelligence in the Practice of Law. The State Bar of California; 2023. Available from: https://www.calbar.ca.gov/Attorneys/Conduct-Discipline/Ethics/Ethics-Technology-Resources