Navigating AI Vendor Contracts and the Future of Law: A Guide for Legal Tech Innovators

by Olga Mack, CodeX Affiliate

AI vendor contracts are more than just legal agreements; they are actively shaping AI governance, liability structures, and compliance standards long before formal regulations take effect. As AI becomes increasingly integrated into critical business and legal processes, vendors are setting the terms for risk allocation, data ownership, and legal accountability, often in ways that prioritize their interests. These contractual patterns provide valuable insights into how AI-related risks and responsibilities are distributed, influencing liability caps, indemnification limitations, and vendor claims over customer data. For legal professionals and innovators in AI governance, legal tech, and regulatory compliance, understanding these trends is critical to navigating the shifting power dynamics in AI adoption.

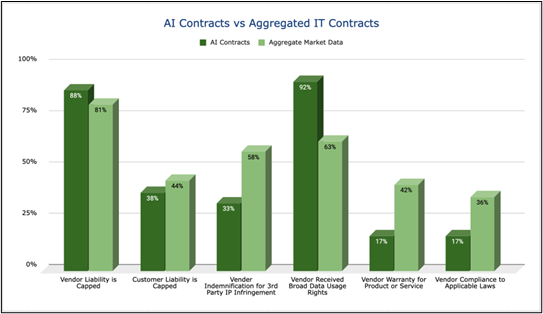

This analysis draws on data from TermScout, a contract certification platform that evaluates the fairness and favorability of tech contracts, providing an empirical basis for identifying key market tendencies. The latest data reveals that 92% of AI vendors claim broad data usage rights, only 17% commit to full regulatory compliance, and just 33% provide indemnification for third-party IP claims—all of which contrast sharply with broader SaaS market norms. By comparing a sampling of contracts from various AI vendors to traditional SaaS agreements, this research highlights distinct trends in liability limitations, indemnification practices, data usage rights, and regulatory commitments. As the legal industry adapts to AI’s growing influence, lawyers, regulators, and legal tech entrepreneurs can anticipate how these evolving contract norms will impact legal practice, contract negotiation, and risk management. Staying ahead of these trends is vital for developing AI-powered solutions that align legal frameworks with AI innovation.

Rethinking AI Liability: Managing Risk in an Evolving Legal Landscape

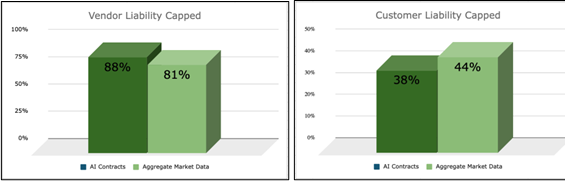

AI vendor contracts are redefining liability allocation, frequently capping financial exposure at fixed amounts that fall below industry norms. According to TermScout data, 88% of AI vendors impose liability caps, aligning closely with broader SaaS trends (81%), yet only 38% cap customer liability, compared to 44% in broader SaaS agreements. This imbalance shifts financial and legal burdens onto customers, possibly leaving them with limited recourse for AI failures—whether due to biased hiring decisions, flawed financial models, or security risks. As AI becomes more deeply embedded in high-stakes decision-making, this misalignment raises potential challenges for businesses and regulators, prompting calls for more adaptive risk-sharing models.

To address this, AI liability models could evolve beyond traditional software frameworks. AI’s autonomous and adaptive nature likely introduces risks that static liability caps fail to address, necessitating risk-adjusted liability frameworks based on AI’s level of control, customer input, and regulatory exposure. Legal tech could play a key role in developing contract analysis tools, risk quantification models, and AI liability insurance markets to fill gaps in vendor accountability. As courts and regulators push for stricter AI liability laws, legal professionals and innovators have a unique opportunity to shape fairer risk-sharing models, so that AI vendors assume appropriate responsibility while allowing innovation to thrive.

Warranties in AI Contracts

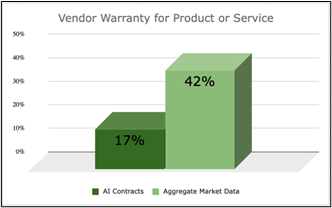

A significant gap in AI vendor agreements is the scarcity of performance warranties. Only 17% of AI contracts in the study included warranties related to compliance with documentation, compared to 42% in SaaS contracts. This lack of protection may expose businesses to risk if AI systems underperform or yield biased results.

To mitigate these risks, companies might consider linking warranties to specific performance metrics and reliability standards. High-stakes applications like compliance or fraud detection could benefit from robust warranties that include remedies such as model retraining or service credits for non-compliance.

Vendors argue that AI’s probabilistic nature makes rigid warranties challenging to enforce. To address this tension, tiered warranties based on complexity or insurance-backed protections could be explored. Aligning warranties with emerging governance frameworks may also provide a competitive edge as regulations evolve.

Balancing Data Rights, Privacy, and Governance

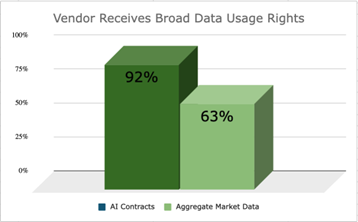

Data rights and privacy concerns are central issues in AI vendor contracts. According to TermScout data, 92% of AI contracts in the study claim data usage rights beyond what is necessary for service delivery—far exceeding the market average of 63%. Many contracts allow vendors to use customer data for retraining models or even competitive intelligence purposes.

These expansive rights could raise concerns about intellectual property protection and regulatory compliance under laws like GDPR and CCPA. Without clear limitations, businesses risk losing control over proprietary data or exposing themselves to fines for privacy violations.

Legal tech solutions can strengthen governance by providing tools to track vendor data usage, flag privacy risks, and enforce compliance. As governments introduce stricter data sovereignty regulations, businesses will need support navigating localization requirements and cross-border transfers. By developing privacy safeguards and proactive monitoring tools, legal tech innovators can help businesses retain control over their data while holding vendors accountable.

Navigating Compliance Challenges

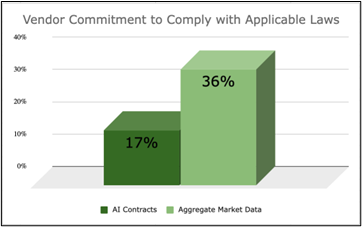

As global regulations evolve—such as the EU AI Act—businesses face growing compliance responsibilities even when relying on third-party vendors. Many AI contracts reviewed shift accountability away from vendors; only 17% explicitly commit to complying with all applicable laws compared to 36% in SaaS agreements.

This may force companies to assume responsibility for bias mitigation and regulatory compliance even when using external systems. Legal tech solutions could be critical here, automating compliance monitoring through real-time tracking of global laws and contractual obligations. As mandatory audits become standard practice, legal tech innovators have an opportunity to develop tools that verify compliance while promoting greater transparency in decision-making processes.
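For readers who want to see the comparisons cited so far side by side, the short Python sketch below tabulates the percentages reported in this article and ranks them by the size of the gap between AI contracts and the comparison group. The term labels and the `gaps` helper are illustrative assumptions, not TermScout's categories or methodology. The comparison figure for data usage rights is the broader market average cited above, while the others are the SaaS figures.

```python
# Percentages as reported in this article: (AI contracts, comparison group).
# Labels are illustrative shorthand, not TermScout's own category names.
FIGURES = {
    "vendor liability capped": (88, 81),
    "customer liability capped": (38, 44),
    "documentation-compliance warranty": (17, 42),
    "data usage rights beyond service delivery": (92, 63),
    "commitment to comply with all applicable laws": (17, 36),
}


def gaps(figures):
    """Return (term, AI minus comparison) pairs, largest absolute gap first."""
    diffs = [(term, ai - benchmark) for term, (ai, benchmark) in figures.items()]
    return sorted(diffs, key=lambda pair: abs(pair[1]), reverse=True)


if __name__ == "__main__":
    for term, diff in gaps(FIGURES):
        # A positive value means the term is more common in AI contracts.
        print(f"{term}: {diff:+d} percentage points")
```

On these figures, the widest gaps are in data usage rights and documentation warranties, while the liability cap figures sit within a few points of the SaaS numbers.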

Predictions for Legal Tech and AI Governance

As AI adoption expands, the legal frameworks governing AI liability, compliance, and transparency will undergo significant transformation. The AI vendor contracts studied favor providers by limiting liability and shifting compliance burdens onto customers, but this approach is arguably unsustainable as AI becomes deeply embedded in regulated industries like finance, healthcare, and legal services. In response, AI-specific liability models could emerge, moving away from rigid contractual caps toward risk-adjusted frameworks that account for AI’s role in decision-making and regulatory exposure. Governments and industry bodies will likely introduce sector-specific AI accountability laws, requiring vendors to assume greater responsibility for model accuracy, bias mitigation, and compliance.

Legal technology will be key in automating AI compliance and risk management, enabling businesses to navigate complex AI regulations across multiple jurisdictions. AI-powered contract analysis tools could increasingly identify liability clauses, assess regulatory risks, and provide real-time updates on AI laws so that businesses can keep pace with evolving standards. As governments mandate AI audits and third-party accountability frameworks, legal tech platforms will facilitate automated compliance tracking, risk exposure modeling, and contract enforcement. AI contracts themselves will likely adapt to include bias audits, explainability requirements, and regulatory compliance guarantees, making contract negotiation and compliance tracking software essential for businesses and law firms.

Vendors that prioritize transparency, offer clear contract terms, and commit to stronger compliance measures could gain a competitive edge, driving demand for legal tech solutions that enhance AI accountability and enforce vendor commitments. As AI governance frameworks mature, legal professionals and businesses will turn to legal technology to bridge the trust gap, holding AI vendors accountable while enabling responsible AI adoption.

Shaping the Future of Responsible AI Adoption

AI is redefining industries while challenging traditional legal frameworks around liability allocation and compliance enforcement. TermScout’s analysis highlights potential imbalances in how risks are distributed between vendors and customers, pinpointing opportunities where legal tech can drive change.

By creating tools that quantify fairness or automate compliance tracking across jurisdictions, legal tech innovators have an opportunity not just to adapt to future standards for responsible AI adoption but to actively shape them.

As trust-building becomes paramount amid rapid technological advances, stakeholders in industries from healthcare to finance are well placed to tackle ethical dilemmas head-on through innovation-driven solutions.

Legal tech innovators who proactively address AI’s contractual, regulatory, and ethical challenges will shape not only the future of AI law but also the evolution of legal technology itself. By creating tools that enhance AI contract negotiations, automate compliance tracking, and provide real-time regulatory updates, legal tech can empower businesses, law firms, and regulators to navigate AI’s complex legal landscape with confidence. As AI governance becomes a defining issue for global legal systems, those who develop solutions to facilitate fairness, accountability, and transparency in AI contracts will set new standards for responsible AI adoption. The legal industry now has a unique opportunity to lead the way in AI governance—and those innovating in legal tech will be the ones defining what that future looks like.

A note of appreciation to TermScout for allowing us limited, anonymized access to data from thousands of IT services agreements in their contract certification platform.